Note: This article is relevant for all versions of TrueNAS and FreeNAS since the initial 8.0 release back in 2011, but some things, like the ARC size in top output, will not be available on older versions. ARC wasn’t added to the “top” output until sometime in the last 5 years. This will likely work the same for all future versions of TrueNAS Core and Enterprise.

TrueNAS, just like FreeBSD (and virtually every other OS out there), can use swap space. Swap space is disk space used to hold data moved out of RAM, which frees up the significantly faster RAM for other uses that may be in more demand. Swap space is typically only used when the system is pressured to use more RAM than it physically has. For TrueNAS, the behavior is pretty unique compared to a typical FreeBSD system. I’ll explain in more detail below.

Swap is useful in some cases, but it can also come with some downsides. Swap on flash (especially NVMe devices) can be very fast, while swap on spinning disks can be excruciatingly slow. If data in swap needs to be accessed, it can take a fast system and make it excruciatingly slow.

Swap is great if you have a program hogging a bunch of RAM it intends to never actually use again, but hasn’t freed up the RAM. The system will write the data to your disks, and happily go about servicing your workloads without any performance issues. This is a fairly well documented behavior for desktops and many servers, but TrueNAS is a bit different with how things happen to work out. Note that this behavior isn’t really by design, it’s just how it happens to work because of the appliance design of TrueNAS.

On TrueNAS, you have 3 basic categories that all of your RAM will fall into:

1. RAM used by the kernel and ZFS.

2. RAM used by all of your services and tasks. This includes things like SSH, Samba for SMB shares, NFS, Cloud Sync tasks, and even the middleware.

3. Free memory.

You can view the memory usage in the WebGUI from the Dashboard. The Dashboard knows how much RAM is free and how much is used for ZFS cache, and the rest is calculated to be for “services”. While not an exact science, it’s accurate enough for you to know how your system is using RAM.

From the command line, you can see how much RAM you are using for ZFS caching with the “top” command. Look at the ARC lines (line 5 and 6 in the header). There it tells you the ARC total size.
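If you’d rather script this than read it off the top header, a minimal sketch like the one below may help. It assumes the sysctl kstat.zfs.misc.arcstats.size reports the current ARC size in bytes, which is the case on FreeBSD-based ZFS systems; the helper name bytes_to_gib is made up for this example.

```shell
#!/bin/sh
# Convert a byte count (first argument) to GiB with two decimal places.
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

# Read the current ARC size in bytes. On systems without this sysctl the
# variable stays empty and nothing is printed.
arc_bytes=$(sysctl -n kstat.zfs.misc.arcstats.size 2>/dev/null || true)
if [ -n "$arc_bytes" ]; then
    echo "ARC size: $(bytes_to_gib "$arc_bytes") GiB"
fi
```

The number should roughly agree with the ARC total that top reports, although the two are sampled at slightly different moments.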

Normally, as your services need more RAM (or less RAM) the ZFS cache resizes itself accordingly to free up (or consume) more RAM as necessary. But in some cases, things can go wrong. The two biggest reasons I have personally seen are:

1. The system load (particularly the disk accessing) is simply too high and ZFS gets too aggressive with RAM usage, starving out the other services.

2. A service or program that is running has a memory leak. A memory leak is when a program allocates RAM to use, but fails to deallocate it when the data in RAM is no longer needed. This means that the longer the service is running, the service with the memory leak slowly consumes more and more RAM. Netdata was a particularly big problem on FreeNAS and TrueNAS. Other services have had this problem in the past, but as of the writing of this post, I’m not aware of any memory leaks. Generally, over the long term, memory leaks are a problem that almost every project written in C and C++ (although not limited to those languages) will have to deal with at some point. Humans write code, and humans make mistakes.
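If you suspect one of these two situations, it can help to see which processes are holding the most RAM. The sketch below is only one way to do that: it assumes the output of `ps -axo rss,pid,comm` (resident memory in KiB, process ID, command name), and the function name top_rss is made up for illustration.

```shell
#!/bin/sh
# Print the N largest processes by resident set size (RSS, in KiB), given
# `ps -axo rss,pid,comm` output on stdin. N is the first argument.
top_rss() {
    # Drop the header line, normalize spacing, sort by RSS descending, keep N.
    awk 'NR > 1 { $1 = $1; print }' | sort -k1,1nr | head -n "$1"
}

# Only query the live process table on FreeBSD (which includes TrueNAS Core).
if [ "$(uname -s)" = "FreeBSD" ]; then
    ps -axo rss,pid,comm | top_rss 5
fi
```

A single run only shows a snapshot. A process whose number keeps climbing across runs taken hours or days apart is the one worth a closer look.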

Netdata services existed in TrueNAS and FreeNAS between 11.1 and 11.3. If you used Netdata during this time, you’d find the gathered information to be a great resource for checking the performance and history of your TrueNAS. Netdata was ultimately removed completely in 11.3 due to there being long-term outstanding memory leaks that would often force TrueNAS and FreeNAS to start using swap space. If netdata wasn’t restarted when it had a memory leak, it would slowly degrade the system to the point of making the system unusable.

On FreeNAS systems, this typically meant writing to spinning disks that were used for your data. This would often mean a massive performance hit for your system if the data needed to be read later.

On TrueNAS systems from iXsystems, this meant writing to your boot device, typically partition 3. This often wasn’t a horrible thing for performance: since the boot devices were solid state drives, you often wouldn’t notice any degradation while a ton of data was written to and read from them, and performance would often not be significantly affected until things were really bad. But as these devices were relatively small (sometimes 16-32GB in size), the extra writes could result in premature failure of your boot device over the long term.

Once you’ve exhausted your swap space, the system will begin killing processes it thinks are not necessary. From my experience this often means SSH and the WebGUI (nginx and things it depends on) are some of the first processes to be killed. Without SSH and WebGUI access things are only going to go downhill as you will no longer have remote access to the TrueNAS for management (or to even reboot it unless you have IPMI access). If your server is in a colo that isn’t local, this could mean even more pain to deal with. I’ve seen people have to fly out of state just to press the power button on a server because it was in a locked colo.

Some processes will not even start because they couldn’t allocate the RAM they needed when they needed it.

Still others will throw bizarre errors that make you think something such as a permission issue exists when there isn’t one.

Now you have a system that is having performance and reliability issues and you can’t even log into it to figure out what is going on. A power cycle is often the only way to get the system to be responsive again. This is obviously not ideal. How are you supposed to fix the problem if you don’t know what the problem is until it’s so bad you can’t even access the machine to investigate?

The situation is further complicated if you are using swap space on a disk, and that disk suddenly fails. Now the data that is supposed to be in RAM (which should *always* be accessible) is on a disk that just failed and is no longer accessible. This means if/when those processes try to use that data, they will not be able to retrieve it. This will further destabilize the system.

TrueNAS by default sets aside 2GB of space at the beginning of every data disk in your system for use as swap. In TrueNAS Core 13.0-U6.1 (current as of the date of this post) this setting is in the WebGUI under System -> Advanced -> Swap Size in GiB. In TrueNAS Enterprise, this setting is not available in the WebGUI. Changing this value only changes how much space is set aside when you create a zpool or add disks to a vdev in the future. At the moment you create a zpool or add disks to an existing zpool, this value is used. The system creates a partition of the specified size, and uses it as swap space. A second partition is then created for your data.
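To check whether your data disks actually carry a swap partition, something like the following sketch can summarize the output of gpart show. The function name list_swap_partitions is hypothetical, and it assumes GPT-partitioned disks whose swap partitions have the type freebsd-swap in the default gpart show layout.

```shell
#!/bin/sh
# Print "<disk>p<index> <size>" for every freebsd-swap partition found in
# `gpart show` output supplied on stdin.
list_swap_partitions() {
    awk '
        $1 == "=>" { disk = $4 }                        # header line names the disk
        $4 == "freebsd-swap" { print disk "p" $3, $5 }  # partition index and size
    '
}

# Only run against the live system when gpart is available (FreeBSD/TrueNAS Core).
if command -v gpart >/dev/null 2>&1; then
    gpart show | list_swap_partitions
fi
```

Disks that were added while the swap size was set to 0 simply won’t show up in the list.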

Prior to 12.0, TrueNAS (later called TrueNAS Enterprise) used swap space on the boot disks. FreeNAS (now called TrueNAS Core) created 2GB swap partitions on the data drives by default.

You can also see what your swap usage is with the command “swapinfo”.
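If you want a single number rather than the full table, a rough sketch like this one adds up the “Used” column of `swapinfo -k`. The function name swap_used_kib is made up for this example, and it assumes every swap device is listed with a path under /dev/.

```shell
#!/bin/sh
# Sum the "Used" column (KiB) across swap devices in `swapinfo -k` output
# read from stdin. Only lines whose first field starts with /dev/ are counted,
# which skips the header and the "Total" summary line.
swap_used_kib() {
    awk '$1 ~ /^\/dev\// { used += $3 } END { print used + 0 }'
}

# Only query the live system when swapinfo is available (FreeBSD/TrueNAS Core).
if command -v swapinfo >/dev/null 2>&1; then
    echo "Swap in use: $(swapinfo -k | swap_used_kib) KiB"
fi
```

On a healthy, properly resourced system this should stay at or near 0.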

So now that we’ve established that using swap space means your system will possibly spiral towards its own demise, we should just disable it, right?

Not so fast. There are consequences to not using swap space.

For starters, if you don’t have swap space, and your system would have started using swap space as things started to get out of control, it means your system will become unstable faster. If you have a TrueNAS HA system, this may be a desired behavior so the system will failover faster and the other node will then take over. You may also want to disable swap space so you don’t prematurely wear out SSD devices and are willing to risk the system crashing sooner if things are not well. Without the swap space to “buy your system more time” before its ultimate failure to perform well for you, it will crash much sooner. You very likely are not going to be aware of any issues with the system before they become serious so buying yourself more time may not really be helpful if you aren’t trying to actively troubleshoot the problem while the system is using more and more swap space. I’d prefer to let the system crash hard, reboot and pick up where it left off than watch it slowly degrade to the point of being useless anyway.

The secret reason why swap space can save your butt:

If you have bought a lot of hard drives over the years, you’ve probably noticed that even among the same model of hard drive, two disks sometimes differ slightly in size. Sometimes it’s just a few MB difference. This spells potential disaster in your future if you RMA a disk and your replacement disk is just a few kilobytes smaller. Once the vdevs are created, a replacement disk that is smaller, even by just a few kilobytes, will not meet the rule that a replacement must be the same size as or bigger than the disk it’s replacing. Naturally, manufacturers don’t particularly care if the hard drive is a few kilobytes smaller and will basically tell you that it’s not their problem. If this happens and you did have swap space set up, then the disk can still be used.

You can change the value for the swap space from 2GiB to 0GiB in the WebGUI, start your resilvering operation, then set the swap space back to 2GiB. This leaves 2GiB more room for the data partition on the replacement disk since the swap partition will not be created. Assuming your replacement disk isn’t more than 2GiB smaller (I’ve personally never seen a difference of more than about 10MB), you can still use the disk. Now you aren’t stuck with a disk you can’t use after the replacement drive has arrived.
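To make the arithmetic concrete, here is a rough sketch of the size check. The function name fits_without_swap and the disk sizes are made up for illustration, and it ignores partition table overhead and alignment, so treat the result as an estimate rather than what the TrueNAS middleware actually computes.

```shell
#!/bin/sh
# Succeed (exit 0) if a replacement disk of new_bytes can hold the data
# partition of the original disk when no swap partition is created on it.
# Arguments: original disk size in bytes, replacement disk size in bytes,
# swap size in GiB that was reserved on the original disk.
fits_without_swap() {
    old_bytes=$1
    new_bytes=$2
    swap_gib=$3
    # Space the original data partition occupied (overhead ignored).
    data_bytes=$((old_bytes - swap_gib * 1024 * 1024 * 1024))
    [ "$new_bytes" -ge "$data_bytes" ]
}

# Hypothetical sizes: a 4TB disk and a replacement that is 10MiB smaller.
for swap in 2 0; do
    if fits_without_swap 4000787030016 4000776544256 "$swap"; then
        echo "original swap ${swap}GiB: replacement fits"
    else
        echo "original swap ${swap}GiB: replacement is too small"
    fi
done
```

With these example numbers the check passes when the original disk reserved 2GiB for swap and fails when it reserved none, which is exactly the buffer described above.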

So what if you don’t want to use swap, but you want the 2GiB buffer in case you need to replace a disk in the future? Can we have both?

What about trying to destroy the first partition and move the second partition to consume this unused space after the fact? This is certainly possible. But it is very time consuming, and comes with risks. For this reason, I never recommend going this route. It’s just not worth it for the additional 2GiB of storage space. The risk versus reward will never be there.

But… you can leave the setting in the WebGUI, and choose to disable swap after bootup.

Normally, if you wanted to disable swap space on a FreeBSD based system, the proper way would be to run the command “swapoff -a”. Unfortunately, this doesn’t work on TrueNAS. For some reason iXsystems doesn’t list their swap space usage in the /etc/fstab file, so the “swapoff -a” doesn’t work. I know they used to, but at some point in the past it was changed. But we can use a script to accomplish the same thing anyway!

Here’s how you do this:

Note that this works under TrueNAS Core, TrueNAS Enterprise and TrueNAS Enterprise High Availability (HA) systems as well. You only have to do this once in the WebGUI for it to apply for both nodes for HA systems.

1. Make sure the swap space is still set to 2GiB (or larger if you want even more space to be available in case you need it) in the WebGUI under System -> Advanced -> Swap Size in GiB. Create your zpool (or add your disks) like you normally would for your version of TrueNAS. If you already have a zpool and swap space is allocated, then you’ve already done this step.

2. Save the below script to your data zpool. Normally I create a folder on my zpool called .scripts and put my scripts in there. The dataset name starts with a period, so it is hidden under normal circumstances if you do a directory listing. Make sure that root has execute permissions on the script with the appropriate chmod and/or chown commands. In my case, the file is saved as /mnt/tank/.scripts/disable_swap.sh.

#!/bin/sh
#Provided by www.snapzfs.com.
#Copyright 2024 by snapzfs.

#This script disables swap for your TrueNAS Core/Enterprise system
#Only lines naming a device under /dev/ are used, which skips the header and the "Total" summary line.
swapinfo -k | awk '$1 ~ /^\/dev\// {print $1}' | while read -r swap_device; do
    echo "Disabling swap for $swap_device"
    swapoff "$swap_device"
done

Give it proper execute permissions. This probably means you should run the below command:

chown root:wheel /mnt/tank/.scripts/disable_swap.sh
chmod 744 /mnt/tank/.scripts/disable_swap.sh

3. Now to see if your work paid off and test it.

Run the below to see what swap you are using:

swapinfo

Run the below to disable all swaps with the script:

/mnt/tank/.scripts/disable_swap.sh

Run the below to verify all swap is disabled:

swapinfo

If that worked, we can proceed with adding it as a cronjob that runs every night to stop any swap usage. You can do a post-init script as well, but this won’t work on TrueNAS HA systems and won’t work when you add a vdev until you reboot or manually execute the script. Having it be a cronjob that runs every day means that no matter what, the system won’t use swap space for more than 24 hours. The workload of this script is very minimal, so there’s no perceivable consequence to running this every day.

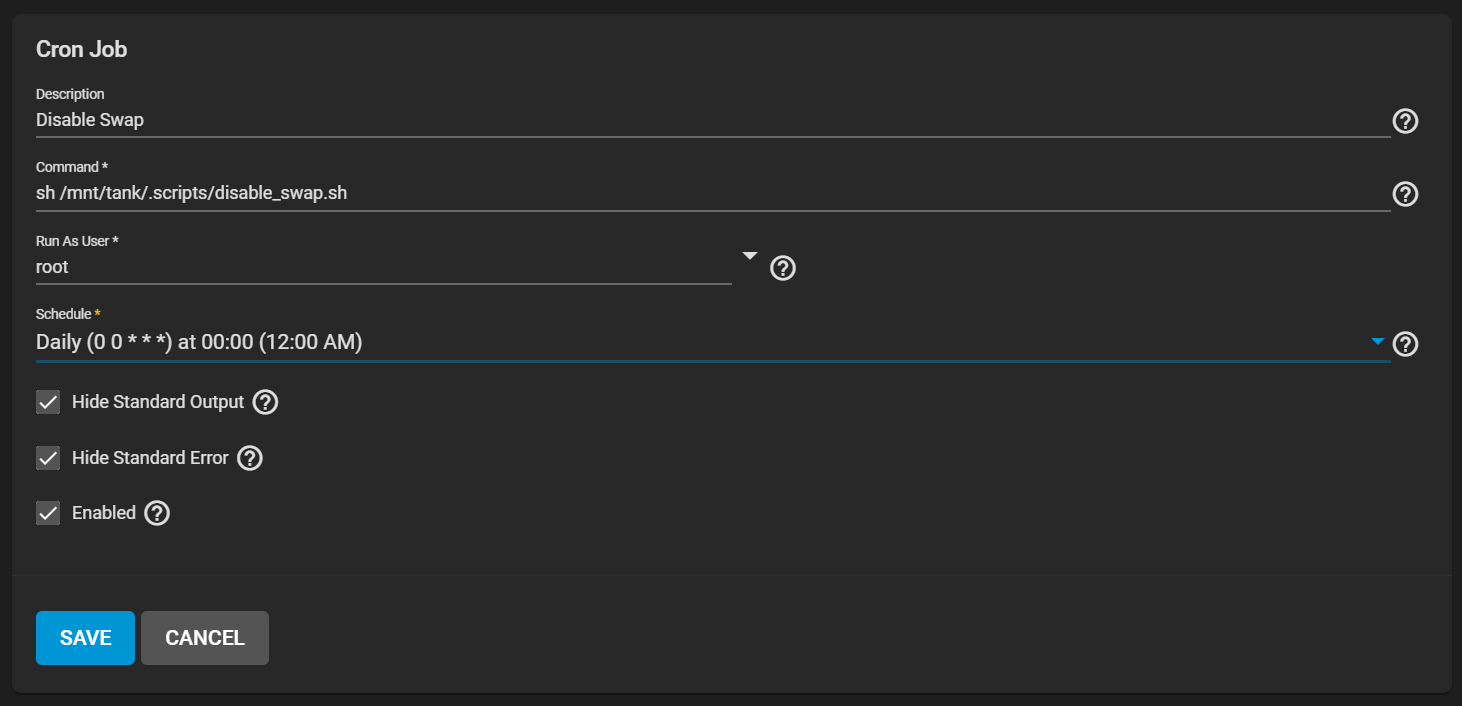

4. Set up the script to run every day as follows:

Under Tasks -> Cron Jobs, click ADD.

Description: Disable Swap

Command: sh /mnt/tank/.scripts/disable_swap.sh

Run as User: root

Schedule: Daily (0 0 * * *) at 00:00 (12:00AM)

Hide Standard Output: Checked

Hide Standard Error: Checked

Enabled: Checked

Save the new task.

If you want to test if all of this works, you can click the “run now” button for the task and then run the below command:

swapinfo

If you’ve done everything correctly, you should see no devices listed.
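If you want that check to be scriptable (for example, to confirm the nightly job did its work), a small sketch along these lines could count the devices swapinfo still reports. The function name active_swap_count is hypothetical, and it assumes swap devices are listed with a path under /dev/.

```shell
#!/bin/sh
# Count the swap devices listed in `swapinfo` output read from stdin.
# The header and the "Total" line are ignored because they do not start with /dev/.
active_swap_count() {
    awk '$1 ~ /^\/dev\// { n++ } END { print n + 0 }'
}

# Only query the live system when swapinfo is available (FreeBSD/TrueNAS Core).
if command -v swapinfo >/dev/null 2>&1; then
    count=$(swapinfo | active_swap_count)
    if [ "$count" -eq 0 ]; then
        echo "Swap is disabled"
    else
        echo "$count swap device(s) still active"
    fi
fi
```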

The important thing to remember is that for most people that have properly resourced the TrueNAS system, there is generally no swap space used. ZFS and your services should allocate and deallocate RAM as needed and the system will balance itself out.

I’ve seen plenty of systems with over 1500 days of uptime never use swap at all. I’ve also seen systems that have a memory leaking service running that had uptimes of just a few hours before things came crashing down.

If you are here because you are having performance issues, it is most likely bad judgement to assume you have a memory leak. Unless you have some kind of documentation saying a memory leak exists for your specific version of TrueNAS, or an expert has thoroughly investigated your system and determined that a memory leak does actually exist, then any problems you have probably aren’t because of a memory leak.

If the issue is that the system is simply overloaded, the solution is to either decrease the load on the system (possibly stagger your heavier workloads and cloud tasks), or add more RAM to the system. An L2ARC may help, but for reasons that are for another discussion, unless you have at least 128GB of RAM, you really shouldn’t be considering an L2ARC. RAM is not that expensive. Especially for business uses, 128GB of RAM is not an extravagant investment these days. I was able to put 1TB of RAM in a server for less than $1000 recently.

I hope you found this educational and useful for your TrueNAS server.

If you have any questions, or would like to have me take a look at your system, feel free to click that “Contact Us” button in the top right corner and reach out.